
Anthropic has released a method to check how evenly its chatbot Claude responds to political issues. The company says Claude should not make political claims without proof and should avoid being viewed as conservative or liberal. Claude’s behavior is shaped by system prompts and by training that rewards what the firm calls neutral answers. These answers can include lines about respecting “the importance of traditional values and institutions,” which shows this is about moving Claude into line with current political demands in the US.

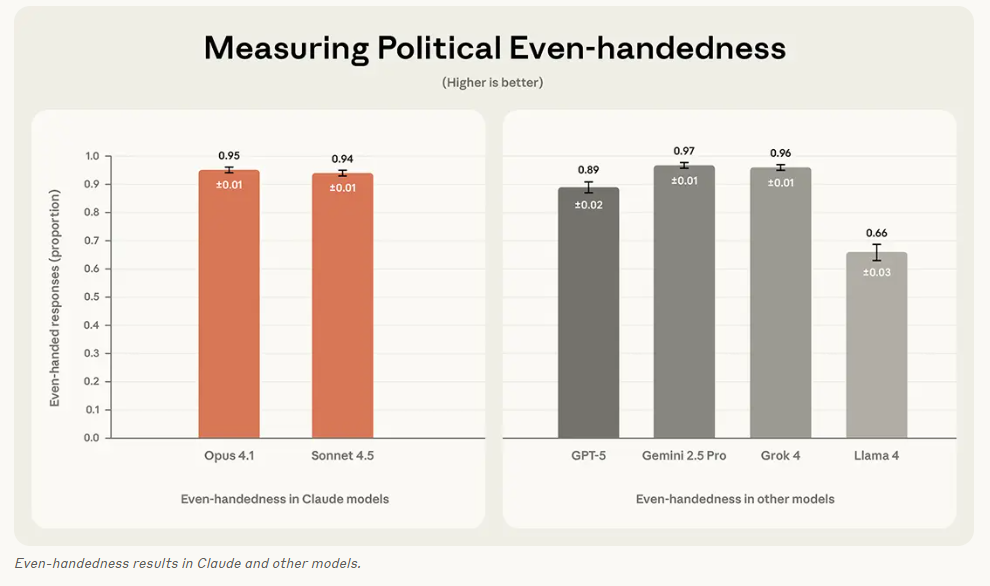

Gemini 2.5 Pro is rated most neutral at 97 percent, ahead of Claude Opus 4.1 (95%), Sonnet 4.5 (94%), GPT‑5, Grok 4, and Llama 4. | via Anthropic

Anthropic does not say this in its blog, but the move toward such tests is likely tied to a rule from the Trump administration that chatbots must not be “woke.” OpenAI is steering GPT‑5 in the same direction to meet US government demands. Anthropic has made its test method available as open source on GitHub.

1 day ago
