Yann LeCun and Randall Balestriero at Meta have introduced LeJEPA, a new learning method designed to simplify self-supervised learning by removing the need for many of the technical workarounds that current systems rely on.

Self-supervised learning is considered a cornerstone of modern AI. But Meta's earlier approaches, including DINO and I-JEPA, still depend on a range of engineering tricks to avoid training failures. According to a new paper from LeCun and Balestriero, LeJEPA tackles that issue at its foundation. It is also likely to be the final paper LeCun publishes at Meta before leaving the company to launch his own startup.

LeJEPA, short for Latent-Euclidean Joint-Embedding Predictive Architecture, is meant to streamline training for LeCun's broader JEPA architecture. The idea is that AI models can learn effectively without extra scaffolding if their internal representations follow a sound mathematical structure.

The researchers show that a model's most useful internal features should follow an isotropic Gaussian distribution, meaning the learned features are centered on a single point, vary equally in all directions, and are uncorrelated with one another. This distribution helps the model learn balanced, robust representations and improves reliability on downstream tasks.
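To make the isotropic Gaussian idea concrete, here is a minimal NumPy sketch that is not taken from the paper. The function name `isotropy_report` and the synthetic data are assumptions made for illustration. It checks two necessary signs of isotropy: a mean close to zero and a covariance matrix whose eigenvalues are all roughly equal.

```python
import numpy as np

def isotropy_report(z):
    """Return (norm of the mean, largest / smallest covariance eigenvalue)."""
    mean = z.mean(axis=0)
    cov = np.cov(z, rowvar=False)          # (dim, dim) sample covariance
    eigvals = np.linalg.eigvalsh(cov)      # eigenvalues in ascending order
    return float(np.linalg.norm(mean)), float(eigvals[-1] / eigvals[0])

rng = np.random.default_rng(0)

# Hypothetical embeddings: 5000 samples, 8 dimensions.
iso = rng.standard_normal((5000, 8))                    # isotropic Gaussian
stretch = np.array([5.0, 1, 1, 1, 1, 1, 1, 0.2])        # per-axis scaling
aniso = iso * stretch                                   # same data, uneven spread

iso_mean, iso_ratio = isotropy_report(iso)
aniso_mean, aniso_ratio = isotropy_report(aniso)

print(f"isotropic:   |mean|={iso_mean:.3f}  eigenvalue ratio={iso_ratio:.2f}")
print(f"anisotropic: |mean|={aniso_mean:.3f}  eigenvalue ratio={aniso_ratio:.2f}")
```

An eigenvalue ratio near 1 means the features vary about equally in every direction, while the stretched sample produces a ratio in the hundreds. These are second-moment checks only, so they cannot confirm that a distribution is actually Gaussian.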

How JEPA models learn structure from raw data

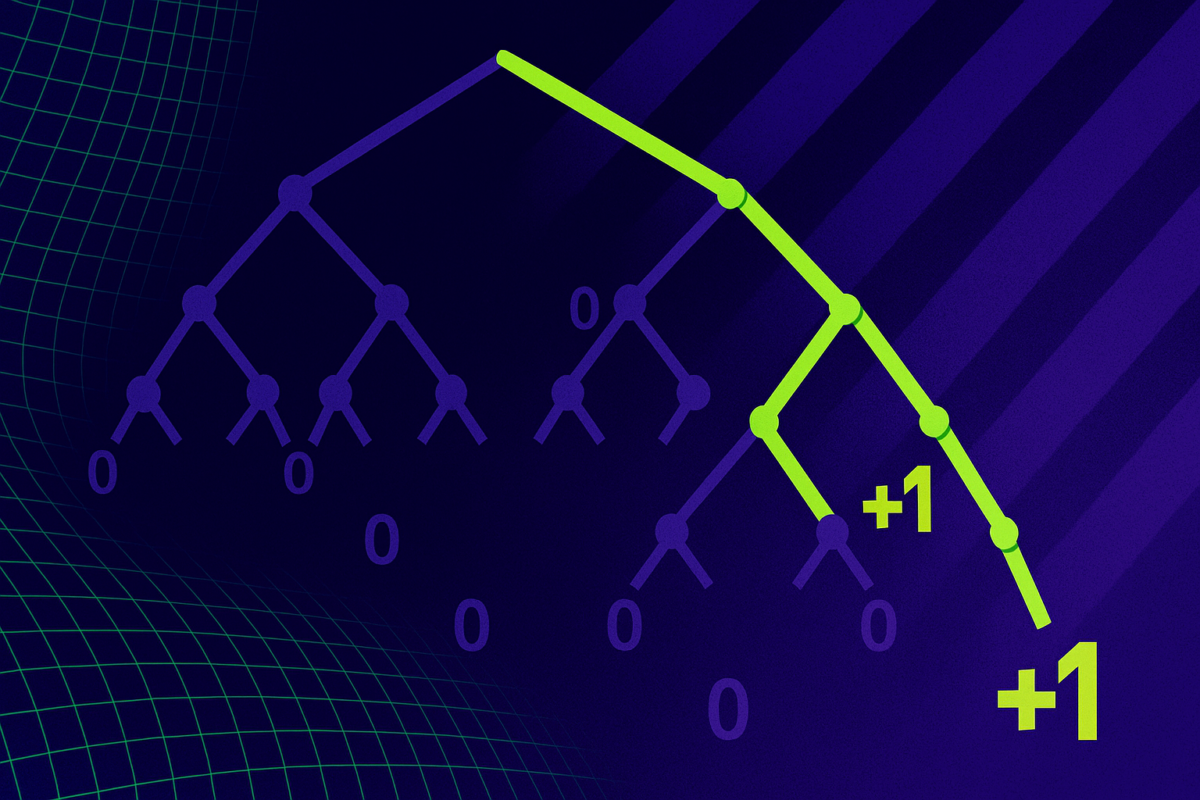

LeCun's JEPA approach feeds the model multiple views of the same underlying information, such as two slightly different image crops, video segments, or audio clips. The goal is for the model to map these variations to similar internal representations when they reflect the same semantic content.

The system learns to identify which aspects of raw data matter, without relying on human labels. It is trained to make predictions about hidden or altered parts of the input based on what it already understands, similar to how a person might recognize an object even if part of it is covered.

This is the core of the JEPA idea: predictive learning that focuses on modeling the underlying structure of the world, not on predicting raw pixels or audio samples. LeCun sees JEPA as a key path toward human-like intelligence and a stronger foundation than today's autoregressive large language models.
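The view-matching objective described in this section can be illustrated with a toy NumPy sketch. Everything here is an assumption for illustration: the linear encoder, the noisy "views", and the name `view_agreement_loss` are hypothetical stand-ins, not the architecture or loss from the paper. The sketch also shows why such objectives need extra care: an encoder that outputs the same vector for every input matches all views perfectly while learning nothing, a failure commonly called representation collapse.

```python
import numpy as np

def view_agreement_loss(encoder, view_a, view_b):
    """Mean squared distance between the embeddings of two views."""
    za, zb = encoder(view_a), encoder(view_b)
    return float(np.mean(np.sum((za - zb) ** 2, axis=1)))

rng = np.random.default_rng(0)

# Hypothetical data: 256 inputs, each seen through two slightly noisy views.
content = rng.standard_normal((256, 16))
view_a = content + 0.1 * rng.standard_normal(content.shape)
view_b = content + 0.1 * rng.standard_normal(content.shape)

weights = rng.standard_normal((16, 4)) / 4.0   # toy linear encoder, 16 -> 4 dims

def linear_encoder(x):
    return x @ weights

def collapsed_encoder(x):
    # Degenerate encoder: ignores its input and always returns zeros.
    return np.zeros((x.shape[0], 4))

loss_linear = view_agreement_loss(linear_encoder, view_a, view_b)
loss_collapsed = view_agreement_loss(collapsed_encoder, view_a, view_b)

print(f"linear encoder loss:    {loss_linear:.4f}")
print(f"collapsed encoder loss: {loss_collapsed:.4f}")
```

The collapsed encoder reaches a loss of exactly zero, which is why an agreement objective on its own is not enough and some additional constraint on the embeddings is needed.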

SIGReg brings stability without extra training hacks

To achieve the ideal feature distribution, the researchers developed a new regularization method called Sketched Isotropic Gaussian Regularization, or SIGReg. It compares the distribution of the model's actual embeddings with the theoretically optimal isotropic Gaussian and penalizes deviations from it during training.

SIGReg replaces many of the common stabilizing tricks used in self-supervised learning, including stop-gradient methods, teacher-student setups, and complex learning rate schedules. The paper reports that SIGReg runs in linear time, uses little memory, scales easily across multiple GPUs, and requires only a single tunable parameter. The core implementation is about 50 lines of code.
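The following NumPy sketch shows one way a regularizer of this kind could work. It is a sketch under stated assumptions, not the paper's implementation: it assumes that "sketched" refers to projecting embeddings onto random one-dimensional directions, and it uses a simple characteristic-function distance to a standard normal, chosen here for illustration. The function name `sliced_gaussian_penalty` and its parameters are hypothetical.

```python
import numpy as np

def sliced_gaussian_penalty(z, num_directions=64, seed=0):
    """Average squared gap between the empirical characteristic function of
    random 1-D projections of z and that of a standard normal, exp(-t^2 / 2)."""
    rng = np.random.default_rng(seed)
    dim = z.shape[1]

    # Random unit directions, one per column.
    directions = rng.standard_normal((dim, num_directions))
    directions /= np.linalg.norm(directions, axis=0, keepdims=True)
    proj = z @ directions                              # (n, num_directions)

    t = np.linspace(-3.0, 3.0, 17)                     # evaluation frequencies
    # Empirical characteristic function per frequency and direction.
    ecf = np.exp(1j * t[:, None, None] * proj[None, :, :]).mean(axis=1)
    target = np.exp(-0.5 * t ** 2)[:, None]            # standard normal target
    return float(np.mean(np.abs(ecf - target) ** 2))

rng = np.random.default_rng(1)
gaussian = rng.standard_normal((2000, 8))              # isotropic Gaussian
stretched = gaussian * np.array([3.0, 1, 1, 1, 1, 1, 1, 1])
collapsed = np.zeros((2000, 8))                        # all embeddings identical

p_gauss = sliced_gaussian_penalty(gaussian)
p_stretched = sliced_gaussian_penalty(stretched)
p_collapsed = sliced_gaussian_penalty(collapsed)

print(f"gaussian:  {p_gauss:.5f}")
print(f"stretched: {p_stretched:.5f}")
print(f"collapsed: {p_collapsed:.5f}")
```

The penalty is close to zero for isotropic Gaussian embeddings, larger for embeddings stretched along one axis, and largest for fully collapsed embeddings. The cost of this sketch grows linearly with the number of embeddings, in line with the linear-time behavior the paper reports for SIGReg. In a training loop, a penalty like this would be added to the prediction loss with a trade-off weight, which is presumably the single tunable parameter the paper refers to.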

A simple theoretical idea with strong results

According to the researchers, LeJEPA remains stable without extra mechanisms, even on large datasets, while delivering competitive accuracy.

In tests across more than 60 models, including ResNets, ConvNeXts, and Vision Transformers, LeJEPA consistently trained stably and performed strongly. On ImageNet-1K, a ViT-H/14 model reached about 79 percent top-1 accuracy using a linear evaluation setup. On specialized datasets like Galaxy10, which contains galaxy images, LeJEPA outperformed large pretrained models such as DINOv2 and DINOv3. The team sees this as evidence that methods built on strong theoretical principles can sometimes surpass massive models trained with conventional techniques, especially on domain-specific tasks.
